Vision Framework Software Development

This page provides the instructions needed to set up a development environment for the MityDSP-L138F Vision Framework.

Install / Fetch the required software

- Install Code Composer Studio Version 5.2 or higher

- Install the MDK/Board Support Package Version 2011_12

- Download the Vision Framework Root Filesystem, version 2011_12

- Download the MityDSP-L138F ARM GCC ToolChain Release 2010_11

- Download the Vision Framework FPGA and Software Project Files, version 2011_12

Set Up the NFS Root Filesystem

Note: There are many mechanisms for mounting a root filesystem during development, including NFS, MMC, NAND, SATA, USB stick, and others. Critical Link's typical development cycle is to boot the development system from an NFS share on a Linux server. When the software is ready for deployment, the filesystem can then be transferred to the final media. This section describes using NFS for development. If NFS is not an option and you need assistance, please contact Critical Link directly.

- Install the NFS server on your VM:

    user@vm # sudo apt-get install nfs-kernel-server

- Set up an NFS mount point and extract the root filesystem into it:

    user@vm # cd ${HOME}
    user@vm # mkdir vf_root
    user@vm # cd vf_root
    user@vm # sudo tar xjf path/to/vision_framework_rootfs_2011-12-05.tar.bz2

This should result in the following directory structure under ${HOME}/vf_root/:

    bin   dev  home  linuxrc  mnt   sbin  tmp  var
    boot  etc  lib   media    proc  sys   usr
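If the archive is unpacked into the wrong directory (for example, one level too deep), the board will most likely fail to boot from the share. The following C++17 sketch checks that the top-level directories from the listing above exist directly under the export directory. The function name MissingRootfsDirs is hypothetical and is not part of the Vision Framework; it only relies on std::filesystem.

```cpp
#include <filesystem>
#include <string>
#include <system_error>
#include <vector>

namespace fs = std::filesystem;

// Hypothetical helper: returns the expected top-level rootfs directories
// that are missing under `root`. An empty result means the archive was
// unpacked directly into `root` rather than into a nested subdirectory.
std::vector<std::string> MissingRootfsDirs(const fs::path& root) {
    // Names taken from the directory listing; linuxrc is a file, not a
    // directory, so it is not checked here.
    static const std::vector<std::string> kExpected = {
        "bin", "boot", "dev", "etc", "home", "lib", "media",
        "mnt", "proc", "sbin", "sys", "tmp", "usr", "var"};

    std::vector<std::string> missing;
    for (const auto& name : kExpected) {
        std::error_code ec;  // treat unreadable entries as missing
        if (!fs::is_directory(root / name, ec)) {
            missing.push_back(name);
        }
    }
    return missing;
}
```

Running it against ${HOME}/vf_root after extraction and getting an empty list is a quick sanity check before editing /etc/exports.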

- Edit /etc/exports (as root) and add a line for your NFS share, replacing "user" with your user name:

    /home/user/vf_root *(rw,no_subtree_check,no_root_squash)

- Restart the NFS server so that it picks up the new share:

    user@vm # sudo /etc/init.d/nfs-kernel-server restart

Configure MityDSP-L138 to Boot From NFS Server

- Determine the IP address of your development environment by running ifconfig from a terminal. This is the IP address of your server.

- Make sure the VDK is on the same local area network as your development PC, power it up, and interrupt the autoboot so that it stops in U-Boot.

- Update the boot command and kernel arguments so that the kernel image is loaded from the NFS root filesystem:

    U-Boot > setenv ipaddr your.module.ip.addr
    U-Boot > setenv bootcmd "nfs c0700000 your.server.ip.addr:/home/user/vf_root/boot/uImage; bootm"
    U-Boot > setenv bootargs mem=96M console=ttyS1,115200n8 rootfstype=nfs root=/dev/nfs nfsroot=your.server.ip.addr:/home/user/vf_root,nolock rw ip=dhcp
    U-Boot > saveenv
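The server address and the export path appear in three places: the /etc/exports line, the bootcmd that fetches boot/uImage, and the nfsroot= kernel argument. The sketch below derives all three from one hypothetical NfsBootConfig structure so that they cannot drift apart. It assumes the load address c0700000 and the kernel arguments used on this page, and it writes the NFS options in the kernel's documented nfsroot=<server-ip>:<root-dir>,<nfs-options> form, with rw as a separate argument. None of these names come from the Vision Framework.

```cpp
#include <string>

// Hypothetical configuration: one place to record the server address and
// the exported directory.
struct NfsBootConfig {
    std::string serverIp;               // IP address of the NFS server (from ifconfig)
    std::string exportPath;             // directory listed in /etc/exports
    std::string loadAddr = "c0700000";  // RAM address the kernel image is loaded to
};

// Line to add to /etc/exports on the server.
std::string ExportsLine(const NfsBootConfig& c) {
    return c.exportPath + " *(rw,no_subtree_check,no_root_squash)";
}

// Value for the U-Boot bootcmd variable: fetch uImage over NFS, then boot it.
std::string BootCmd(const NfsBootConfig& c) {
    return "nfs " + c.loadAddr + " " + c.serverIp + ":" + c.exportPath +
           "/boot/uImage; bootm";
}

// Value for the U-Boot bootargs variable (the kernel command line).
std::string BootArgs(const NfsBootConfig& c) {
    return "mem=96M console=ttyS1,115200n8 rootfstype=nfs root=/dev/nfs "
           "nfsroot=" + c.serverIp + ":" + c.exportPath + ",nolock rw ip=dhcp";
}
```

The returned strings can be printed and pasted after setenv bootcmd (in double quotes, because of the ";") and setenv bootargs at the U-Boot prompt.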

Boot the unit

Run "boot" from U-Boot. If everything is set up correctly, the unit loads the kernel from the NFS share and boots to a login prompt. Log in as root and run the demonstration application:

    root@mitydsp# cd vision_demo
    root@mitydsp# ./run_vision.sh

The application should start, and the monitor should show the Qt application running.

Compiling the Software

The vision framework software includes CCS v5.2 projects for both the ARM and the DSP that should build "out of the box". The sections below describe how to import the projects and set them up in a workspace. The vision_framework directory is organized as follows:

    hw/
    hw/fpga                 -> files needed to build the FPGA
    hw/fpga/build_lx16_dvi  -> files needed to build the LX16 FPGA using the DVI interface
    hw/fpga/build_lx16_nec  -> files needed to build the LX16 FPGA using the NEC WQVGA touch screen panel
    sw/
    sw/common               -> files common to the ARM and DSP projects (message definitions)
    sw/ARM                  -> project folder for the ARM Qt application
    sw/DSP                  -> project folder for the DSP DSP/BIOS application
    /sys
    /sys/doc                -> marketing documents for this product

Setting up the DSP Vision Project

These instructions assume Linux as your primary development station. The code should also build in a Windows environment, but you will need to extract the tarballs and the MDK and make them available on the Windows machine.

- Untar the vision framework tarball:

    user@linuxvm: tar xjf vision_framework_2011_12_05.tar.bz2

- Launch Code Composer Studio Version 5.2.

- Import the DSP portion of the application. Select File->Import, select the Code Composer Studio project type, choose Existing CCS/CCE Eclipse Projects, and click "Next". Browse to the vision_framework/sw/DSP directory and click "OK". The tool should discover the vision_dsp project; check it and click "Finish".

This assumes that the MDK was installed in your home directory. If it was not, you will need to change the MDK path by setting the Properties->Build Variables->MDK variable to the base directory of your MDK installation.

Setting up the ARM Project (using CCS v5.2)

As with the DSP project, these instructions assume Linux as your primary development station; on Windows, the tarballs and the MDK must first be extracted and made available on the Windows machine.

- Untar the vision framework tarball (if you have not already done so for the DSP project):

    user@linuxvm: tar xjf vision_framework_2011_12_05.tar.bz2

- Launch Code Composer Studio Version 5.2.

- Import the ARM portion of the application. Select File->Import, select the Qt project type, choose Qt Project, and click "Next". Browse to the vision_framework/sw/ARM directory and select the "vision.pro" project file. The tool should discover the vision project; check it and click "Finish".

As with the DSP project, this assumes that the MDK was installed in your home directory. If it was not, set the Properties->Build Variables->MDK variable to the base directory of your MDK installation.

Example: Adding a Custom DSP Algorithm

This example adds a simple algorithm that displays every camera pixel (from a monochrome 10-bit camera) exceeding a given threshold as red, and converts the remaining pixels to grayscale RGB 5-6-5. The process has three steps:

- Define a new algorithm in the shared messages area.

- Update the algorithm description on the ARM framework.

- Add the algorithm to the DSP framework.

Defining a new algorithm type

To select the new algorithm through the framework, we first need to define a unique index for it that both the ARM and the DSP understand. This is done in the shared header "common/dsp_msgs.h", which already defines several algorithms for the example framework. We add ours at index 10 (which must not already be used by another mode) by adding the following line:

    #define MODE_MAXCLIP_DSP 10

Adding the algorithm to the ARM image

The ARM application framework must be told to add the new mode to its list of available modes, and it needs a human-readable description of the mode. The ARM application may also need a GUI menu or some other mechanism to supply configuration data for the new mode (this example does not need any, yet). The available modes are listed in the ModeData[] array at the top of ARM/mainwindow.cpp. Add an entry for the new algorithm just before the MODE_IDLE entry, which must remain last:

    /* ... earlier entries of the tsModeData ModeData[] array ... */
    {MODE_EDGEDETECT_DSP,
        "Sobel 3x3 Edge Detection (DSP) : In this mode the camera\n"
        "data is treated as monochrome and a 3x3 Sobel Edge\n"
        "Detection algorithm is run on the DSP with the results\n"
        "converted to a grayscale 5-6-5 (32 level) color map\n"
        "for display."},
    {MODE_MAXCLIP_DSP,
        "Converts monochrome camera data to 5-6-5 grayscale data for\n"
        "display. All values above 1200 will be set to red.\n"},
    {MODE_IDLE,
        "Idle Mode"}, /* must be last */
    };
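This page does not show how mainwindow.cpp consumes ModeData[], so the following is only a sketch of one plausible pattern: a lookup that scans the table until it reaches the MODE_IDLE entry. With such a loop, any entry placed after MODE_IDLE would never be found, which would explain why the idle entry must stay last. The tsModeData field names, the DescribeMode function, and the numeric values of MODE_EDGEDETECT_DSP and MODE_IDLE are hypothetical; only MODE_MAXCLIP_DSP = 10 comes from this page.

```cpp
#include <cstddef>

// Stand-ins for common/dsp_msgs.h. MODE_MAXCLIP_DSP = 10 is from this page;
// the other two values are hypothetical placeholders.
#define MODE_IDLE           0
#define MODE_EDGEDETECT_DSP 3
#define MODE_MAXCLIP_DSP    10

// Hypothetical layout of one ModeData[] entry: mode index plus description.
struct tsModeData {
    int         mode;
    const char* description;
};

static const tsModeData ModeData[] = {
    {MODE_EDGEDETECT_DSP, "Sobel 3x3 Edge Detection (DSP)"},
    {MODE_MAXCLIP_DSP,    "Max clip (DSP)"},
    {MODE_IDLE,           "Idle Mode"}, /* must be last */
};

// Returns the description for `mode`, or nullptr if the scan reaches the
// MODE_IDLE sentinel first. Entries after MODE_IDLE are never visited.
const char* DescribeMode(int mode) {
    for (std::size_t i = 0;; ++i) {
        if (ModeData[i].mode == mode) return ModeData[i].description;
        if (ModeData[i].mode == MODE_IDLE) return nullptr;
    }
}
```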

Adding the algorithm to the DSP image

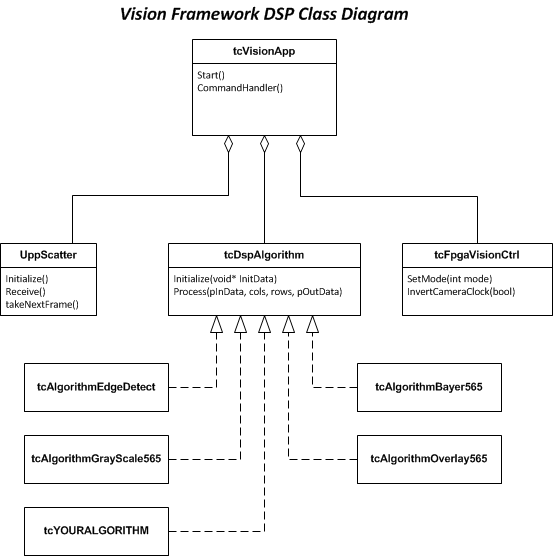

The figure above shows the top-level C++ class diagram of the DSP portion of the vision processing framework. There are four main classes to be concerned with:

- tcVisionApp - the top-level application class, responsible for coordinating startup and shutdown and for handling messages received from the ARM.

- UppScatter - this class provides a simple control API to the OMAP-L138 UPP peripheral. It configures a triple-buffered continuous DMA for the camera data and provides a mechanism for the tcVisionApp class to request frame data at runtime.

- tcFpgaVisionCtrl - this class provides a simple control API to the MityDSP-L138F FPGA core that selects the FPGA algorithms and configuration to run.

- tcDspAlgorithm - an abstract class (with pure virtual methods) that defines the API for user-defined algorithms that the DSP can run in the framework. The vision application framework provides several example algorithms derived from this class (edge detection, monochrome to RGB 5-6-5 grayscale conversion, Bayer pattern to RGB 5-6-5 conversion, etc.). To add a new processing technique, you would normally derive your own class from it.
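To make the relationship between tcVisionApp and tcDspAlgorithm more concrete, here is a minimal C++ sketch of an abstract algorithm interface and a table that selects the active algorithm by the mode index received from the ARM. The Initialize/Process signatures and the tcAlgorithmTable helper are assumptions for illustration only; the framework's actual classes may be structured differently (for example, the frame buffers would presumably come from UppScatter rather than being passed in by the caller).

```cpp
#include <cstdint>
#include <map>

// Hypothetical shape of the tcDspAlgorithm interface.
class tcDspAlgorithm {
public:
    virtual ~tcDspAlgorithm() {}
    // Called when the mode is selected, with the frame geometry.
    virtual int Initialize(int width, int height) = 0;
    // Called per frame: reads raw camera pixels, writes RGB 5-6-5 pixels.
    virtual int Process(const std::uint16_t* in, std::uint16_t* out) = 0;
};

// Hypothetical stand-in for the part of tcVisionApp that maps a mode index
// from the ARM to a registered algorithm and runs it on each frame.
class tcAlgorithmTable {
public:
    void Register(int mode, tcDspAlgorithm* algo) { m_algos[mode] = algo; }

    // Handles a "select mode" request. Returns false for unknown modes or
    // if the algorithm fails to initialize; the active algorithm is then
    // left unchanged.
    bool Select(int mode, int width, int height) {
        auto it = m_algos.find(mode);
        if (it == m_algos.end()) return false;
        if (it->second->Initialize(width, height) != 0) return false;
        m_active = it->second;
        return true;
    }

    // Runs the active algorithm on one frame; does nothing when none is active.
    int RunFrame(const std::uint16_t* in, std::uint16_t* out) {
        return m_active ? m_active->Process(in, out) : 0;
    }

private:
    std::map<int, tcDspAlgorithm*> m_algos;
    tcDspAlgorithm* m_active = nullptr;
};
```

Keeping the dispatch behind a virtual interface means adding a mode only requires a new derived class and one registration, without touching the frame loop.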

For this example, we derive a tcAlgorithmMaxClip class from tcDspAlgorithm and define our own Initialize and Process methods.

- Add the tcAlgorithmMaxClip.h header file.

- Add the tcAlgorithmMaxClip.cpp implementation file.
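A minimal sketch of what tcAlgorithmMaxClip might contain, with the header and implementation shown together. It assumes one 16-bit word per pixel on both input (10-bit camera samples) and output (RGB 5-6-5), and it declares a stand-in tcDspAlgorithm base with hypothetical Initialize/Process signatures so that the block compiles on its own. Grayscale pixels keep the top 5 of the 10 bits (32 levels), like the 32-level grayscale map described for the edge detection mode. The threshold is a constructor parameter (an assumption). Note that a 10-bit sample never exceeds 1023, so the value 1200 quoted in the mode description would only take effect with wider camera data.

```cpp
#include <cstddef>
#include <cstdint>

// Stand-in for the framework's tcDspAlgorithm; real signatures may differ.
class tcDspAlgorithm {
public:
    virtual ~tcDspAlgorithm() {}
    virtual int Initialize(int width, int height) = 0;
    virtual int Process(const std::uint16_t* in, std::uint16_t* out) = 0;
};

// ---- tcAlgorithmMaxClip.h (sketch) ----
class tcAlgorithmMaxClip : public tcDspAlgorithm {
public:
    explicit tcAlgorithmMaxClip(std::uint16_t threshold) : m_threshold(threshold) {}
    int Initialize(int width, int height) override;
    int Process(const std::uint16_t* in, std::uint16_t* out) override;
    // Converts one 10-bit sample to one RGB 5-6-5 pixel.
    std::uint16_t ConvertPixel(std::uint16_t sample) const;

private:
    std::uint16_t m_threshold;
    std::size_t   m_numPixels = 0;
};

// ---- tcAlgorithmMaxClip.cpp (sketch) ----
static const std::uint16_t kRed565 = 0xF800;  // R = 31, G = 0, B = 0

int tcAlgorithmMaxClip::Initialize(int width, int height) {
    if (width <= 0 || height <= 0) return -1;  // reject invalid geometry
    m_numPixels = static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    return 0;
}

std::uint16_t tcAlgorithmMaxClip::ConvertPixel(std::uint16_t sample) const {
    if (sample > m_threshold) return kRed565;
    // Keep the top 5 of 10 bits, then place them in R (bits 15-11),
    // G (bits 10-5, shifted up one bit to span 6 bits) and B (bits 4-0).
    std::uint16_t g5 = static_cast<std::uint16_t>((sample >> 5) & 0x1F);
    return static_cast<std::uint16_t>((g5 << 11) | (g5 << 6) | g5);
}

int tcAlgorithmMaxClip::Process(const std::uint16_t* in, std::uint16_t* out) {
    for (std::size_t i = 0; i < m_numPixels; ++i) {
        out[i] = ConvertPixel(in[i]);
    }
    return 0;
}
```

For example, with tcAlgorithmMaxClip(1000), a sample of 1001 becomes pure red (0xF800), 1000 becomes the brightest gray (0xFFDF), and 32 becomes the dark gray 0x0841.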
